PixVerse is betting that the next major inflection point in generative video will come not from visual fidelity alone but from real-time control. As interactive AI video moves from passive generation to continuous user steering, YourNewsClub views the company’s latest release as a structural shift in how video content is created, consumed, and monetized.

Unlike traditional text-to-video systems that operate in discrete steps, PixVerse allows users to direct characters mid-scene – altering motion, emotion, and posture as the video unfolds. This collapses the delay between intention and output, transforming video creation into a live process rather than a queued task. From an industry perspective, this reduces friction not just for creators, but for entire distribution pipelines built around speed and iteration.

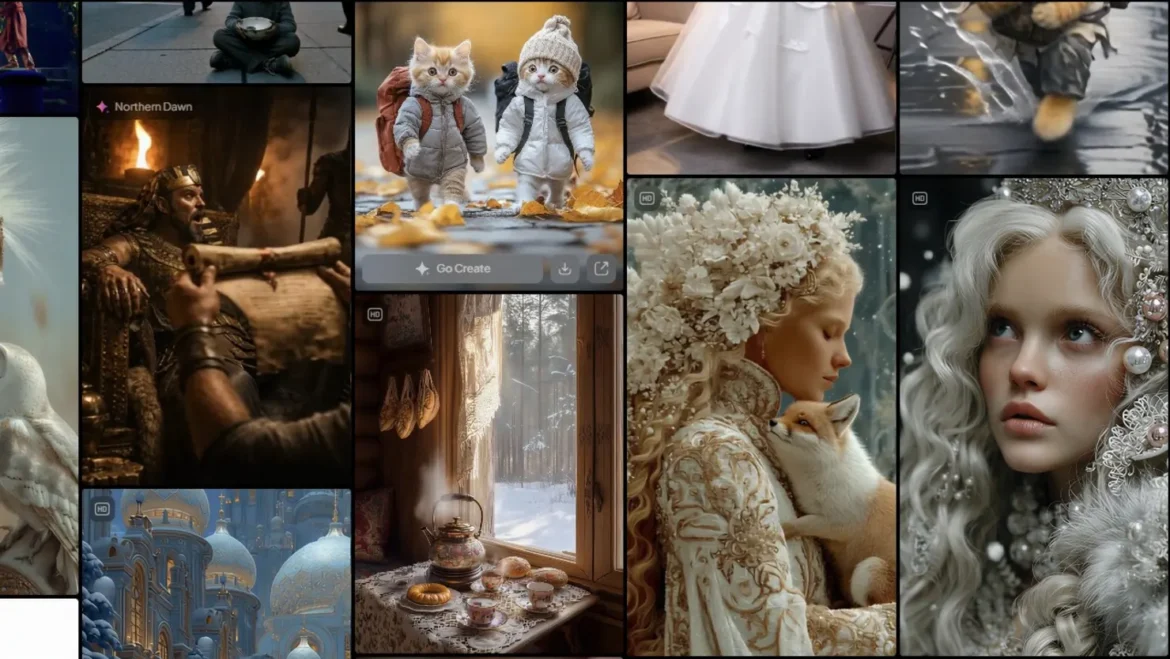

The strategic logic extends beyond tooling. PixVerse has embedded its real-time generation inside a social-style platform, signaling that engagement loops – not standalone renders – are the core asset. YourNewsClub interprets this as a deliberate attempt to own usage behavior rather than compete solely on model benchmarks. In generative media, habit formation often outperforms raw technical superiority.

Funding momentum and user growth targets reinforce this positioning. The company’s aggressive expansion plans suggest confidence that real-time interaction will unlock new formats, from branching micro-dramas to endlessly adaptive gaming experiences. This aligns with a broader pattern in AI markets, where control rather than novelty becomes the decisive differentiator once baseline quality converges.

According to Owen Radner, an analyst at YourNewsClub specializing in digital infrastructure and computational scaling, real-time video exposes weaknesses that offline generation can hide. “Interactive generation stresses bandwidth, GPU scheduling, and inference efficiency simultaneously,” he notes. “Only platforms built for sustained load – not demos – will scale without collapsing margins.” Through this lens, PixVerse’s challenge is as much operational as it is creative.

There are also governance implications. Maya Renn, an analyst focused on AI ethics and access to technological power, argues that real-time generation compresses accountability timelines. “When creation happens instantly, moderation and provenance can’t be bolted on later,” she says. “They must be native to the system.” YourNewsClub agrees that watermarking, action limits, and audit trails will determine whether interactive video earns institutional trust.

The competitive landscape is fragmenting. Premium cinematic models and high-throughput creator engines are diverging into separate markets, each with distinct economics. PixVerse appears firmly aligned with the latter, prioritizing speed, accessibility, and volume over cinematic perfection. This positions it well for social platforms, marketing content, and user-driven entertainment – but only if infrastructure and safeguards keep pace.

For creators, real-time video should be treated as a prototyping and engagement layer rather than a final production endpoint. For platforms, governance must evolve alongside capability. And for investors, the signal to watch is not virality, but retention under load.

As YourNewsClub concludes, the future of AI video will be decided less by how impressive a single clip looks – and more by who controls the session, the workflow, and the trust envelope around it.